We're proud to provide Continuous Delivery services.

Mass.gov

Balancing content freshness and performance on Mass.gov.

Strategic optimizations transformed Mass.gov, delivering a 50% boost in back-end performance and a 30% improvement in front-end speed.

Massachusetts Department of Family and Medical Leave (DFML)

Elevating the PFML application experience for Massachusetts residents through testing.

Last Call Media developed a robust testing solution to streamline the Paid Family and Medical Leave (PFML) application process, minimize disruptions, and instill greater trust in government solutions.

The Commonwealth of Massachusetts

Improving the Drupal authoring experience.

Improving the experience to improve the content.

The Commonwealth of Massachusetts

Massive performance wins.

Balancing fresh content and top-notch performance.

The Commonwealth of Massachusetts

Paid Family and Medical Leave for the Commonwealth of Massachusetts

Thousands of people would need to access this state-offered benefit from the very first day it became available. Our mission was to ensure they would be able to rely on the technology making it possible.

Custom tailoring Haverford.edu.

Strategic pivot, design and development.

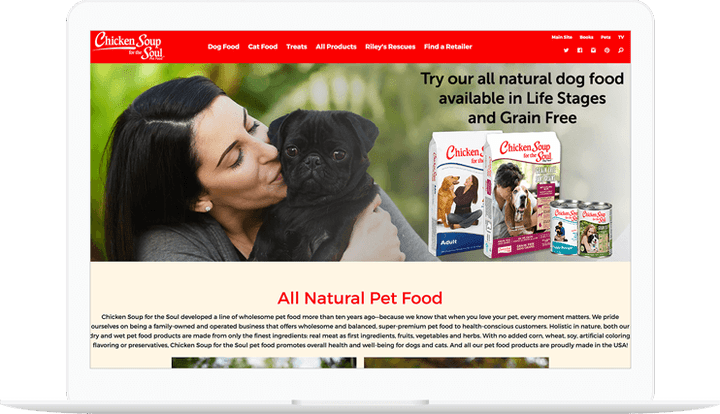

Chicken Soup for the Soul

Pet food microsite, built in two weeks.

Leveraging our Scaffolding and Drupal 8.

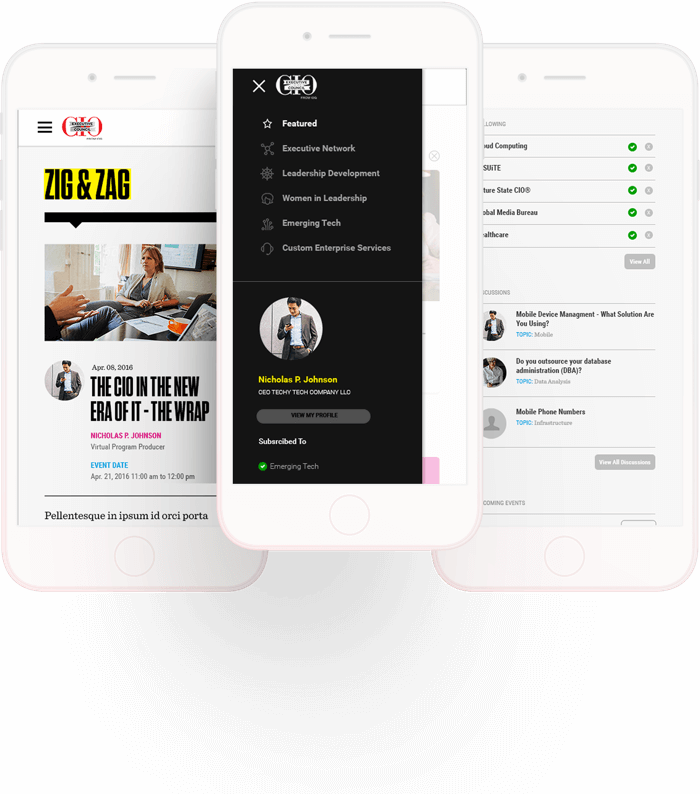

HIAS

Continuous delivery to HIAS.

Helping the oldest international migration and refugee resettlement agency in the U.S.

NYSE Euronext.