We're proud to provide services to our friends in Government.

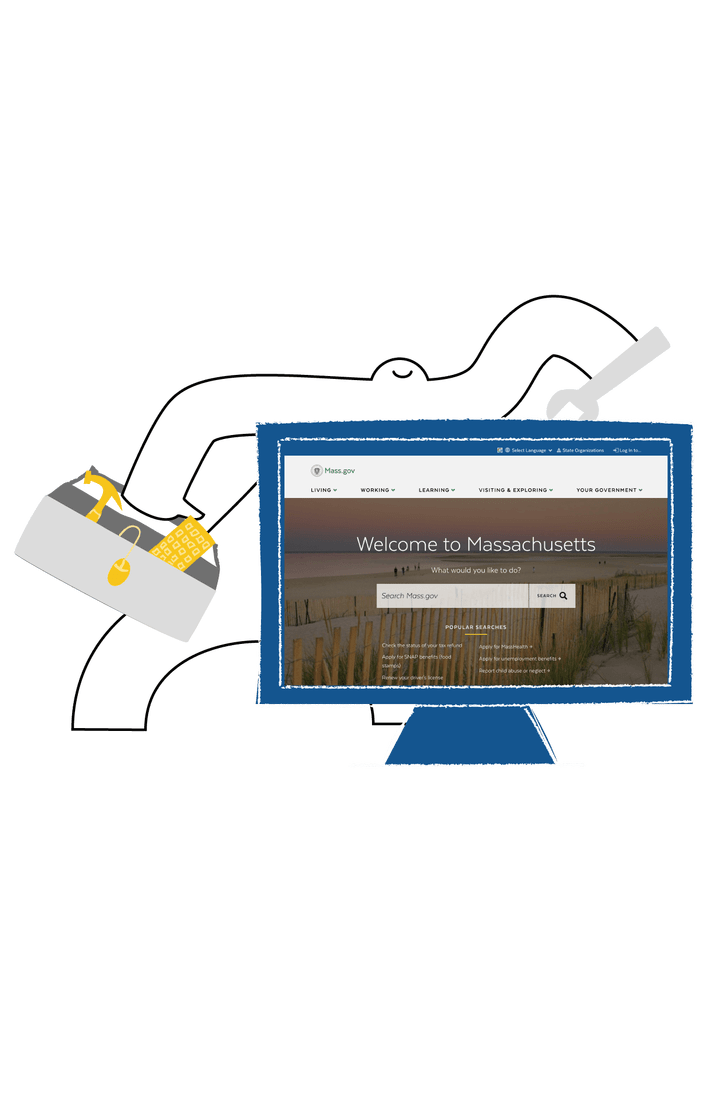

Mass.gov

Balancing content freshness and performance on Mass.gov.

Strategic optimizations transformed Mass.gov, delivering a 50% boost in back-end performance and a 30% improvement in front-end speed.

The Commonwealth of Massachusetts

Massive performance wins.

Balancing fresh content and top-notch performance.

Downtown Northampton Association

Branding and print design.

Helping those who help their communities.

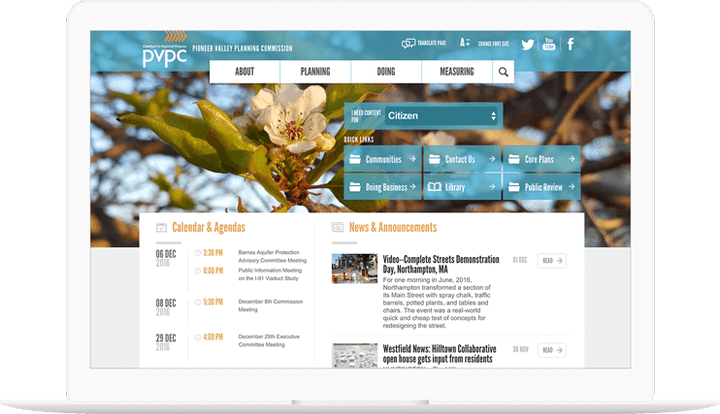

A new design for PVPC.

Queens Library

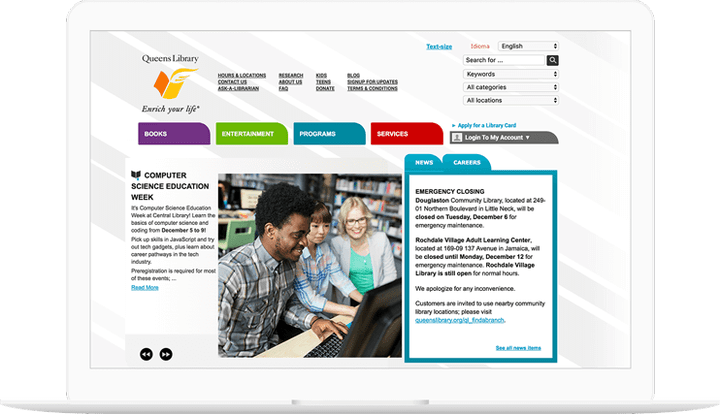

Catalog integration for Queens Library.

Real-time information integrations for better collection interactions.

A Hub for Emergency Preparedness.

The Commonwealth of Massachusetts

Best-in-class content delivery and caching.

Longer-lasting caches and fast load times, at a lower cost.

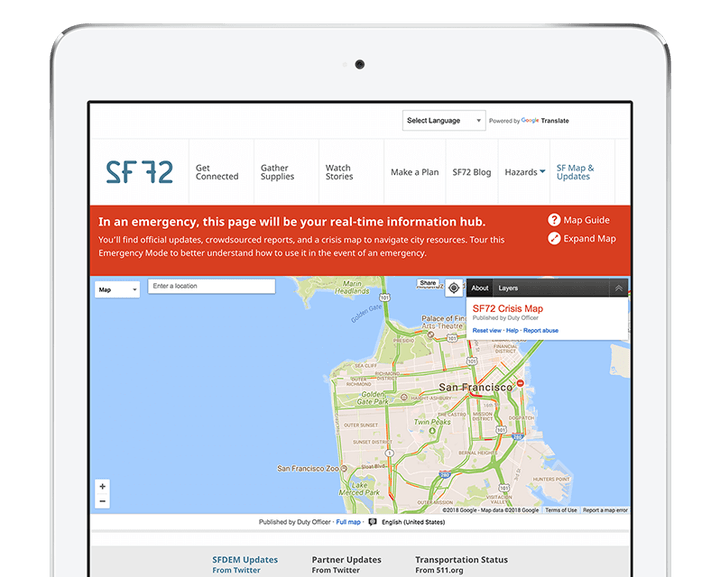

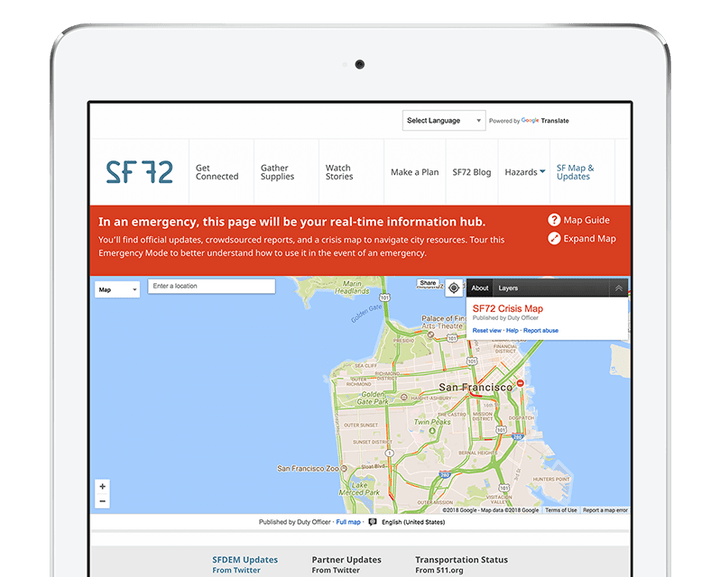

The City and County of San Francisco

Drupal powers San Francisco's accessible emergency preparedness hub.

The Drupal-powered Emergency Management website serves as an accessible resource, providing critical information and tools for emergency preparedness.

The Commonwealth of Massachusetts

Easy-to-use API key management tool.

Creating an easy way for EOTSS to manage API keys.

The Commonwealth of Massachusetts

Enabling continuous improvement by listening to constituents.

Collecting and displaying site feedback.
