Anyone involved in delivering public digital services knows the stakes are high. Your platforms need to perform at a high standard 24/7. Interruptions can have serious consequences, impacting lives and putting your organization in the hot seat. At the same time, you have an opportunity to build credibility and public trust by ensuring your site works every time, for everyone.

Government agencies can meet this challenge by shifting from manual, reactive QA to modern testing strategies.

This article introduces the methods we use to deliver reliable services, including:

- Early, continuous testing driven by automation

- End-to-end testing to validate real-world user flows

- Business simulations to prepare your team for challenges

- Centralized dashboards to provide high observability

This article uses testing approaches implemented for Massachusetts’ Paid Family and Medical Leave (PFML) program as one example of how these strategies can be applied in practice.

Why manual testing alone won’t cut it

Government agencies are in an era of digital transformation — adopting sophisticated technologies like cloud platforms and APIs, and embracing a culture of fast, frequent releases. Manual testing simply can’t keep up. It’s too slow and labour-intensive to comprehensively cover such complex, ever-evolving systems.

Continuing to rely on manual testing is risky. Issues may go unnoticed until late in development, or even until after launch. Late-stage defects are also more disruptive, often delaying launches, driving up costs and damaging the user experience.

One of the most overlooked benefits of automating tests is how they serve as living documentation of how your system is supposed to behave.

— Angie Jones (@techgirl1908) June 24, 2021

Modern testing is early, ongoing and automated

Your testing strategy should empower you to build, refine, and scale complex systems with speed and confidence. Three practices work together to make that possible:

- Early testing – also called shift-left testing, this approach moves testing into the earliest phases of development so you catch issues before they snowball. Early testing lowers risk, reduces QA bottlenecks and minimizes post-launch cleanup.

- Continuous testing – Re-running tests with every code change creates a rapid feedback loop, fostering agility and incremental improvement. Continuous testing is integral to Continuous Integration/Continuous Delivery (CI/CD), a set of practices for releasing changes faster and more reliably.

- Automated testing – Automation is what makes early, continuous testing practical. Scripted tests can be repeated quickly and consistently across different environments and browsers, broadening coverage and removing the variability of manual checks.

Case study: automating early detection and regression testing

- The Massachusetts Department of Family and Medical Leave (DFML) contracted Last Call Media to address the complex integration requirements of the PFML program and ensure its features and enhancements rolled out consistently.

- Early testing enabled us to quickly catch, triage, and assign issues to relevant teams for resolution. Fixes were deployed to lower environments, where automated tests were rerun to ensure fixes wouldn’t break the system or have unintended side effects. This systematic process not only increased the stability of the application, but also nurtured a culture of continuous improvement and quality assurance in the development cycle.

End-to-end testing validates the full user journey

It’s common for teams to test specific aspects of their system, for example:

- Unit testing – validating small, individual pieces of code

- Integration testing – checking cohesion between components

- Performance testing – assessing limits under stress and heavy load

These tests are important, but on their own they don’t show how the system behaves from the user’s perspective.

End-to-end testing simulates real user journeys from start to finish. It not only verifies functionality and performance, but also validates human-centered design by testing real-world scenarios.

The scenarios should range from common user tasks to more complex flows, offering comprehensive insight into the system’s usability. Accessibility testing should be embedded throughout to ensure an inclusive user experience.
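To make the idea concrete, here is a minimal sketch of what an end-to-end test checks, written in Python as pytest-style test functions. The components (`Portal`, `ApiLayer`, `ClaimsSystem`), their methods and the form fields are hypothetical in-memory stand-ins for a user-facing portal, an API layer and a back-end claims system; they are not the PFML system’s actual design. In a real suite, the journey would be driven through a browser automation tool against deployed services. What the sketch shows is the shape of the test: act only through the user-facing entry point, then assert on both what the user sees and the final state in the last system in the chain.

```python
from dataclasses import dataclass, field


# Hypothetical in-memory stand-ins for three separately built components.
# In a real end-to-end suite these would be deployed services, exercised
# through a browser and over HTTP rather than called in-process.
@dataclass
class ClaimsSystem:
    claims: dict = field(default_factory=dict)

    def create_claim(self, claim_id: str, applicant: str, leave_type: str) -> None:
        self.claims[claim_id] = {"applicant": applicant, "leave_type": leave_type, "status": "received"}


@dataclass
class ApiLayer:
    claims_system: ClaimsSystem
    next_id: int = 1

    def submit(self, applicant: str, leave_type: str) -> str:
        # The API layer enforces its own rules before handing off downstream.
        if leave_type not in {"family", "medical"}:
            raise ValueError(f"unsupported leave type: {leave_type}")
        claim_id = f"C{self.next_id:05d}"
        self.next_id += 1
        self.claims_system.create_claim(claim_id, applicant, leave_type)
        return claim_id


@dataclass
class Portal:
    api: ApiLayer

    def apply(self, form: dict) -> str:
        # The portal checks required fields before calling the API.
        missing = [name for name in ("name", "leave_type") if not form.get(name)]
        if missing:
            return "error: missing " + ", ".join(missing)
        return "confirmation: " + self.api.submit(form["name"], form["leave_type"])


def build_system():
    """Wire the three stand-ins together, returning the claims store and the portal."""
    claims = ClaimsSystem()
    return claims, Portal(ApiLayer(claims))


def test_complete_application_reaches_claims_system():
    claims, portal = build_system()
    # Act only through the user-facing entry point...
    message = portal.apply({"name": "Test Applicant", "leave_type": "medical"})
    # ...then check both what the user sees and the end state downstream.
    assert message == "confirmation: C00001"
    assert claims.claims["C00001"]["status"] == "received"


def test_incomplete_application_never_reaches_claims_system():
    claims, portal = build_system()
    message = portal.apply({"name": "Test Applicant"})
    assert message == "error: missing leave_type"
    assert claims.claims == {}
```

Saved as, say, `test_apply_journey.py`, the file can be run with `pytest`, which collects functions whose names start with `test_`. The second test matters as much as the first: a good end-to-end suite also confirms that bad input is stopped at the right layer and never leaves partial records downstream.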

Case study: ensuring smooth user flows from start to finish

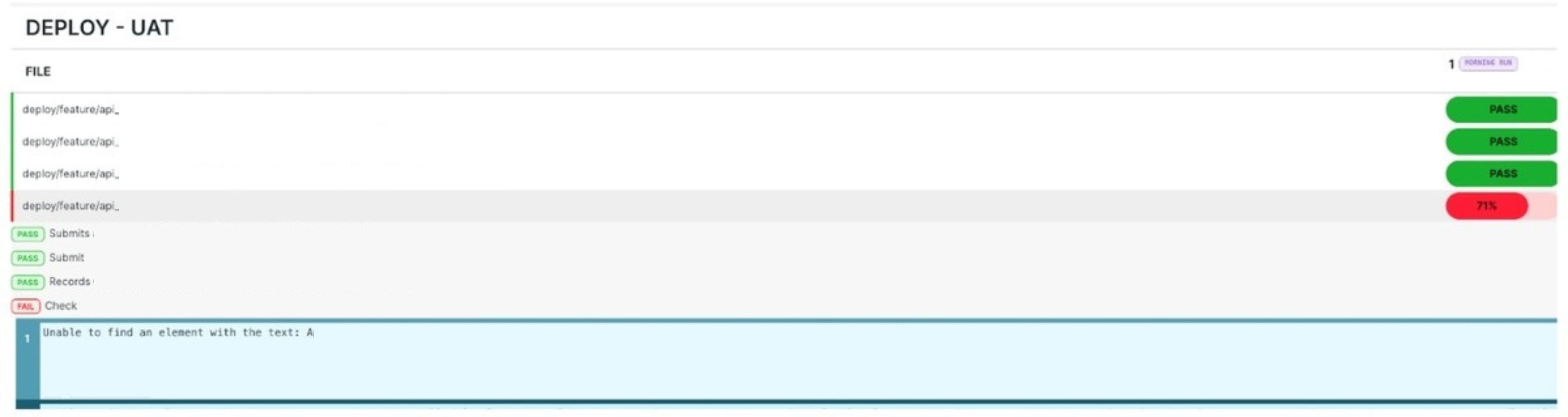

- Massachusetts’ PFML project was inherently complex because a multi-vendor team was building three distinct components: the user-facing portal, the API integration layer, and the claims processing system. All three needed to work together as one cohesive system.

- We implemented a robust suite of end-to-end tests covering the application’s core and high-risk features. This approach enabled us to detect and resolve 796 defects, 77% of the total discovered in the program’s first three years. As a result, users were less likely to encounter disruptions, which helped build trust in the PFML program.

Business simulations prepare your team for any scenario

Even the best-built application isn’t enough on its own — the surrounding human processes must work in sync with the technology. Business simulation provides realistic user data and scenarios to support training and refine workflows. It’s a safe way for teams to explore how the technology fits into their day-to-day work.

Case study: preparing teams with realistic training data

- Before rolling out the new PFML program, the DFML needed to ensure its call centre teams were ready to handle applications and respond to customer queries. We supported this by generating thousands of synthetic PFML applications in various stages of completion. Practicing with realistic data allowed call centre staff to build knowledge and confidence, ensuring they were ready to support the PFML program from day one.

- As a bonus, working through realistic scenarios revealed bugs that earlier testing hadn’t caught. These were triaged and resolved before launch, making the final system more stable and reliable.
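Generating synthetic applications like the ones described above can be sketched in a few lines of Python. The example assumes a hypothetical six-stage workflow, a simplified record shape and an arbitrary base date; none of these reflect the real PFML data model. Two design choices matter more than the details: a fixed random seed, so every training or test run gets an identical dataset, and fields that are filled in progressively by stage, so partially completed applications look like real in-progress ones. Names are drawn from a deliberately artificial list so no real personal data is involved.

```python
import random
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical workflow stages, in order; a real program defines its own.
STAGES = ["started", "identity_verified", "leave_details_added",
          "submitted", "in_review", "decided"]
LEAVE_TYPES = ["family", "medical"]
# Deliberately artificial names so no real personal data is involved.
FIRST_NAMES = ["Alex", "Jordan", "Sam", "Riley", "Casey", "Morgan"]
LAST_NAMES = ["Testperson", "Sample", "Example", "Fixture"]


@dataclass
class SyntheticApplication:
    application_id: str
    applicant_name: str
    stage: str
    leave_type: Optional[str] = None
    leave_start: Optional[date] = None
    leave_end: Optional[date] = None


def generate_applications(count: int, seed: int = 42,
                          base_date: date = date(2021, 1, 1)) -> list[SyntheticApplication]:
    """Create `count` synthetic applications spread across all stages."""
    rng = random.Random(seed)  # fixed seed: identical dataset on every run
    details_stage = STAGES.index("leave_details_added")
    applications = []
    for i in range(count):
        stage = rng.choice(STAGES)
        app = SyntheticApplication(
            application_id=f"SYN-{i:06d}",
            applicant_name=f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}",
            stage=stage,
        )
        # Applications at or past the leave-details stage carry those fields;
        # earlier ones are left incomplete, like real in-progress forms.
        if STAGES.index(stage) >= details_stage:
            app.leave_type = rng.choice(LEAVE_TYPES)
            app.leave_start = base_date + timedelta(days=rng.randrange(365))
            app.leave_end = app.leave_start + timedelta(weeks=rng.randint(1, 12))
        applications.append(app)
    return applications


if __name__ == "__main__":
    apps = generate_applications(5000)
    print(Counter(app.stage for app in apps))  # rough count per stage
```

Because the output is reproducible, the same dataset can seed a training environment and a test environment, and any bug it surfaces can be recreated exactly.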

Centralized dashboards support informed decisions

Government systems often involve complex integrations, multiple vendors, and a sprawl of tools. When this leads to fragmented views and silos, it’s difficult to make informed decisions or respond quickly to issues.

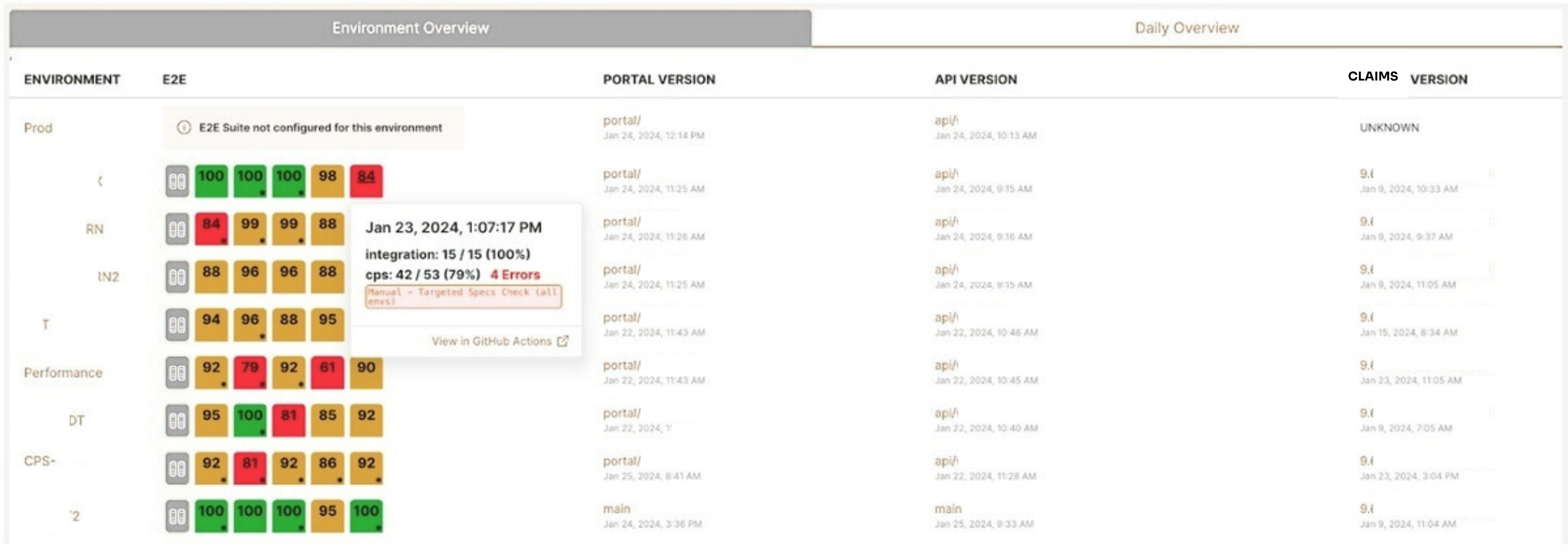

A centralized dashboard gives you a clear picture of your system’s overall health. It enables teams to work collaboratively, respond with speed and clarity, track long-term trends, and uncover hidden vulnerabilities.

Case study: fostering transparency and efficiency with a tailored dashboard

- We built a customized Observability Dashboard that gave DFML staff and other teams insight into regressions, test failures, environments, versions, and other key aspects of test results and performance. It fostered a culture of transparency and accountability among teams, streamlined issue resolution, and enabled faster releases for Massachusetts residents. Custom reporting gave both technical and business stakeholders actionable insights, while the scalable, cloud-based solution provided an alternative to costly third-party subscriptions.
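At its core, a dashboard like this rolls raw test results up into per-environment views. The Python sketch below assumes a hypothetical record format (run ID, environment, version, test name, pass/fail) and treats a regression as a test that passed in an environment’s previous run but fails in its latest one. It illustrates one simple way to compute figures of this kind; it is not the implementation behind the DFML dashboard.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ResultRecord:
    # Hypothetical schema for one test outcome reported by a CI pipeline.
    run_id: int       # increases with each pipeline run
    environment: str
    version: str      # assumes every result in a run shares one version
    test_name: str
    passed: bool


def summarize(results: list[ResultRecord]) -> dict[str, dict]:
    """Roll raw results up into one summary per environment."""
    by_env: dict[str, list[ResultRecord]] = defaultdict(list)
    for record in results:
        by_env[record.environment].append(record)

    summary = {}
    for env, records in by_env.items():
        run_ids = sorted({r.run_id for r in records})
        latest = run_ids[-1]
        previous = run_ids[-2] if len(run_ids) > 1 else None

        # If a test was retried within a run, the last record listed wins.
        latest_by_test = {r.test_name: r for r in records if r.run_id == latest}
        passed_before = {r.test_name for r in records if r.run_id == previous and r.passed}
        failures = sorted(name for name, r in latest_by_test.items() if not r.passed)

        summary[env] = {
            "version": next(iter(latest_by_test.values())).version,
            "pass_rate": sum(r.passed for r in latest_by_test.values()) / len(latest_by_test),
            "failures": failures,
            # Regression: passed in the previous run, fails in the latest one.
            "regressions": [name for name in failures if name in passed_before],
        }
    return summary


if __name__ == "__main__":
    sample = [
        ResultRecord(1, "stage", "v1.0", "submit_claim", True),
        ResultRecord(1, "stage", "v1.0", "upload_document", True),
        ResultRecord(2, "stage", "v1.1", "submit_claim", False),
        ResultRecord(2, "stage", "v1.1", "upload_document", True),
    ]
    for env, stats in summarize(sample).items():
        print(env, stats)
```

Keeping the aggregation logic separate from the charts makes it easy to unit-test on its own, and the same summaries can feed both a technical view (failures, regressions) and a business view (pass rate per release).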

A final word on testing government digital services

Modern testing is an essential foundation for reliable, efficient digital platforms. The most spectacular visual designs and cutting-edge technologies will fail to impress users if they experience disruptions or errors in your service. Comprehensive testing is always a worthy investment — whether you’re planning a new application, overhauling an existing system, or looking for ways to enhance service delivery.

Ready to replace uncertainty with clarity? Our high-observability dashboards translate complex system data into clear insights that both technical and business stakeholders can act on. We deliver scalable, customized, cloud-based solutions without the overhead of traditional testing infrastructure. Learn more about what we do or get in touch.

This article was created in partnership with our content writer, Peggy McGregor.